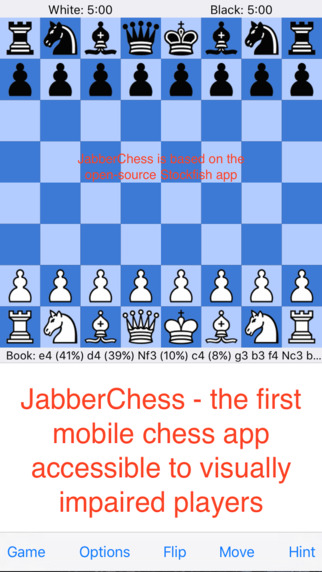

JabberChess, A Mobile Chess App Speech Recognizer for Visual and Motion Impaired Users

Jackson Chen, a high-schooler from Colorado, US, has recently released a voice-controlled chess application built with CMUSphinx. You can find it in the App Store.

It is a very interesting case of CMUSphinx used in a real-life application, not least because Jackson has written a complete report of his experience building the chess application and shared the models he created in the process. He did a huge amount of work testing the application in real-life conditions. He compared the performance of CMUSphinx grammars and language models, and he also explored recognition with a commercial engine from Nuance. You can easily guess which engine provided the best accuracy; if you want to learn more, please check the full report. You can find the models here.

If you have created your application with CMUSphinx, please let us know!

OpenEars 2.5 is out

See the details here. OpenEars now supports speech recognition in English, Spanish, Mandarin Chinese, French, German, and Dutch.

Pocketsphinx wrappers with SWIG for Ruby and Javascript

There is a big demand for speech recognition APIs in various programming environments. Python is getting a lot of traction, Ruby is still widely used among Ruby on Rails developers, and JavaScript is gaining importance even among non-web programmers. Surprisingly, Node is quite popular in robotics and embedded development these days. Then there is Java on servers and Java on Android, C# on Windows mobile and desktop, and C# in Mono and Unity3D, a widespread game platform. Then there is the GStreamer multimedia framework, also quite popular due to its support for complex media processing pipelines. Last, Microsoft somehow decided to forget C# and recently invented Managed C++ for Windows Phone. Hopefully, they will forget about that beast soon.

It might seem reasonable to create a specific wrapper for every case, and there are many ways to write wrappers. For example, in Python you can use FFI, Cython and a few other technologies. But you need to take into account that a wrapper is not simply a piece of code to invoke methods. Language support comes with documentation on how to use the decoder in that particular language, tests, performance evaluations and so on. It is quite complex to maintain even simple bindings for one language; imagine if you need to support 10 of them. There are examples of custom wrappers created for CMUSphinx, such as Pocketsphinx-Ruby, which is based on FFI, or the Node wrapper for Pocketsphinx. Looking at those efforts, you can see that, while extremely useful, such wrappers are significantly inconsistent with each other, which makes it harder to reuse C documentation and C examples and increases the maintenance effort needed to support them all.

There are very good solutions to this problem. One of them is SWIG, a wrapper generator with a special API description language. With SWIG one can easily create interfaces in a dozen languages and access all pocketsphinx features in a consistent way.

Recently we implemented SWIG wrappers for several more languages, including Ruby and Node JavaScript. We have also supported Python and Java bindings through SWIG for quite some time. As a result, you get a consistent native interface in many languages.

For example, here is Ruby code:

    require 'pocketsphinx'

    # Configure the acoustic model, dictionary and language model
    config = Pocketsphinx::Decoder.default_config()
    config.set_string('-hmm', '../../model/en-us/en-us')
    config.set_string('-dict', '../../model/en-us/cmudict-en-us.dict')
    config.set_string('-lm', '../../model/en-us/en-us.lm.bin')
    decoder = Pocketsphinx::Decoder.new(config)

    # Feed raw audio to the decoder in 4096-byte chunks (binary mode)
    decoder.start_utt()
    File.open("../../test/data/goforward.raw", "rb") { |f|
      while record = f.read(4096)
        decoder.process_raw(record, false, false)
      end
    }
    decoder.end_utt()

    # Print the best hypothesis, then each word with its frame boundaries
    puts decoder.hyp().hypstr()
    decoder.seg().each { |seg|
      puts "#{seg.word} #{seg.start_frame} #{seg.end_frame}"
    }

And here is JavaScript code:

    var fs = require('fs');
    var ps = require('pocketsphinx').ps;

    var modeldir = "../../model/en-us/";

    // Configure the acoustic model, dictionary and language model
    var config = new ps.Decoder.defaultConfig();
    config.setString("-hmm", modeldir + "en-us");
    config.setString("-dict", modeldir + "cmudict-en-us.dict");
    config.setString("-lm", modeldir + "en-us.lm.bin");
    var decoder = new ps.Decoder(config);

    fs.readFile("../../test/data/goforward.raw", function(err, data) {
        if (err) throw err;

        // Decode the whole file as a single utterance
        decoder.startUtt();
        decoder.processRaw(data, false, false);
        decoder.endUtt();
        console.log(decoder.hyp());

        // Walk the word segments of the recognized utterance
        var it = decoder.seg().iter();
        var seg;
        while ((seg = it.next()) != null) {
            console.log(seg.word, seg.startFrame, seg.endFrame);
        }
    });

Here is Python code:

    from os import path

    from pocketsphinx.pocketsphinx import *
    from sphinxbase.sphinxbase import *

    modeldir = "../../../model"

    # Configure the acoustic model, language model and dictionary
    config = Decoder.default_config()
    config.set_string('-hmm', path.join(modeldir, 'en-us/en-us'))
    config.set_string('-lm', path.join(modeldir, 'en-us/en-us.lm.bin'))
    config.set_string('-dict', path.join(modeldir, 'en-us/cmudict-en-us.dict'))
    decoder = Decoder(config)

    # Feed raw audio to the decoder in 4096-byte chunks
    decoder.start_utt()
    stream = open(path.join("../../../test/data", 'goforward.raw'), 'rb')
    while True:
        buf = stream.read(4096)
        if buf:
            decoder.process_raw(buf, False, False)
        else:
            break
    decoder.end_utt()

    hypothesis = decoder.hyp()
    print('Best hypothesis: ', hypothesis.hypstr, " model score: ", hypothesis.best_score, " confidence: ", hypothesis.prob)
    print('Best hypothesis segments: ', [seg.word for seg in decoder.seg()])

There are two issues with using this technology. First, SWIG is not always easy for newcomers: the syntax is complex and not always easy to comprehend. However, the whole framework is extremely flexible and makes it possible to implement very complex features like iterators. So the additional effort to learn SWIG is definitely justified, and we have quite some experience with it now, so we can help anyone interested in adding support for a new language.

The second issue is that it is not always easy to support language-specific features; for example, the async interfaces of Node require a lot of additional work on top of the C API. However, such an API should be implemented as an extension of the SWIG-created interface, which makes it easy to keep the API consistent across languages and frameworks and allows us to improve the C API as well. This is the approach we took with Java on Android, and I believe it could be successful for other languages.
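To illustrate the idea of layering an asynchronous API on top of the generated bindings, here is a minimal sketch in Python rather than Node. It assumes only that the decoder object exposes `start_utt()`, `process_raw()`, `end_utt()` and `hyp()` as in the examples above; the function name `decode_stream_async` and the `FakeDecoder` stand-in are hypothetical and exist only so the sketch runs without pocketsphinx installed. It is not how any existing wrapper implements async support.

```python
import asyncio
import io


async def decode_stream_async(decoder, stream, chunk_size=4096):
    """Run the blocking decode loop in a worker thread and return decoder.hyp().

    `decoder` is assumed to behave like the SWIG-generated Decoder used in
    the examples above; nothing else about it is required.
    """
    def blocking_decode():
        decoder.start_utt()
        while True:
            buf = stream.read(chunk_size)
            if not buf:
                break
            decoder.process_raw(buf, False, False)
        decoder.end_utt()
        return decoder.hyp()

    loop = asyncio.get_running_loop()
    # Passing None selects the event loop's default thread pool executor.
    return await loop.run_in_executor(None, blocking_decode)


class FakeDecoder:
    """Hypothetical stand-in for a real decoder, for demonstration only."""

    def __init__(self):
        self.calls = []
        self.bytes_seen = 0

    def start_utt(self):
        self.calls.append("start_utt")

    def process_raw(self, data, no_search, full_utt):
        self.bytes_seen += len(data)

    def end_utt(self):
        self.calls.append("end_utt")

    def hyp(self):
        return "decoded %d bytes" % self.bytes_seen


if __name__ == "__main__":
    audio = io.BytesIO(b"\x00" * 10000)
    print(asyncio.run(decode_stream_async(FakeDecoder(), audio)))
```

The point of the design is that the async layer never touches the C API directly: it only calls the same methods every binding already exposes, so the underlying interface stays consistent across languages.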

A separate project, node-pocketsphinx, which enables simple installation of the Node wrapper with the npm package manager, is a tiny layer on top of the SWIG-created wrapper. The project only needs to provide the package files, and you can enjoy the full decoder features through the npm Pocketsphinx module. It still lacks an async API, which is important for Node, but we hope to add it soon.

The large variety of use cases we meet with shared language wrappers is very helpful for C API design, as pointed out above. For example, we recently changed the word segment iterator C API to provide better consistency across different languages and simpler access. Hopefully, such activity will enable us to create a good, stable C API for the upcoming release of the CMUSphinx framework, version 5.
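As a small illustration of what a consistent segment iterator makes possible, the sketch below converts segment frame indices into seconds. It assumes segment objects with `word`, `start_frame` and `end_frame` attributes, as in the Ruby example above, a frame rate of 100 frames per second (the pocketsphinx default, to the best of our knowledge), and that `end_frame` is inclusive. The names `segment_timings` and `WordTiming` are hypothetical helpers, not part of the bindings.

```python
from collections import namedtuple

# Assumption: 100 frames per second; adjust if the decoder is configured otherwise.
DEFAULT_FRAME_RATE = 100

WordTiming = namedtuple("WordTiming", "word start_sec end_sec")


def segment_timings(segments, frame_rate=DEFAULT_FRAME_RATE):
    """Convert segments carrying frame indices into second-based timings."""
    timings = []
    for seg in segments:
        timings.append(WordTiming(
            seg.word,
            seg.start_frame / frame_rate,
            # end_frame is treated as inclusive (an assumption), so the word
            # is taken to end once that frame has finished.
            (seg.end_frame + 1) / frame_rate,
        ))
    return timings
```

With a real decoder this would be called as `segment_timings(decoder.seg())` after `end_utt()`; any iterable of objects with the three attributes works equally well.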

QtSpeechRecognition API for Qt Using Pocketsphinx

It is really great to see the wide variety of APIs arising around Pocketsphinx. One recent addition is the QtSpeechRecognition API implemented by Code-Q for assistive applications. This undertaking is quite ambitious; the main features include:

- Speech recognition engines are loaded as plug-ins.

- Engine is controlled asynchronously, causing only minimal load to the application thread.

- Built-in task queue makes plug-in development easier and forces unified behavior between engine integrations.

- Engine integration handles the audio recording, making it easy to use from the application.

- Application can create multiple grammars and switch between them.

- Setting mute temporarily disables speech recognition, allowing co-operation with audio output (speech prompts or audio cues).

- Includes integration to PocketSphinx engine (latest codebase) as a reference.

You can discuss the features and find more details in the following thread on the Qt mailing list. You can find the sources under review in the qtspeech project, branch wip/speech-recognition.

The implementation already includes some pretty interesting features; for example, it intelligently saves and restores the cepstral mean normalization (CMN) state for more robust recognition. So let us see how it goes.